Kathleen C. Fraser

Associate Professor, School of Electrical Engineering and Computer Science, University of Ottawa

My research is in the area of natural language processing (NLP) and artificial intelligence (AI). I'm particularly interested in issues related to ethical AI and AI safety, including bias and fairness, social stereotypes, deception, manipulation, and model evaluation. A large part of my research also focuses on NLP for healthcare, particularly the detection of early signs of cognitive impairment from speech and language features.

Current Projects

Feel free to get in touch for more information or possible collaboration.

Cognitive Decline

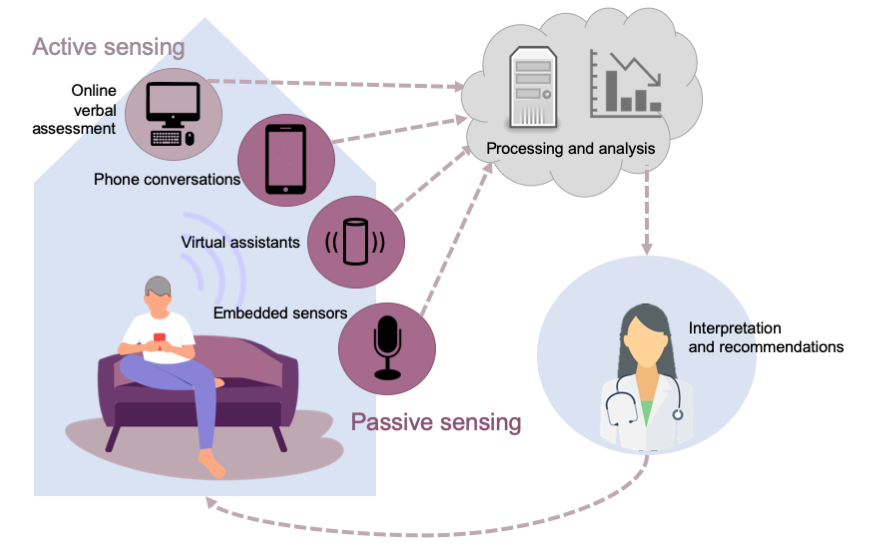

Algorithms to automatically detect speech and language markers of cognitive or mental state.

Stereotyping and Bias

Examining stereotyping and bias in social media text and in machine learning models.

AI Safety

Evaluating frontier AI models and fine-tuned models for safety risks, and designing mitigations.

Selected Publications

For an up-to-date list of publications, please see my Google Scholar page.

Examining Gender and Racial Bias in Large Vision-Language Models Using a Novel Dataset of Parallel Images

Fraser & Kiritchenko (2024)

Aporophobia: An Overlooked Type of Toxic Language Targeting the Poor

Kiritchenko, Curto, Nejadgholi, & Fraser (2023)

A Friendly Face: Do Text-to-Image Systems Rely on Stereotypes when the Input is Under-Specified?

Fraser, Nejadgholi, & Kiritchenko (2023)

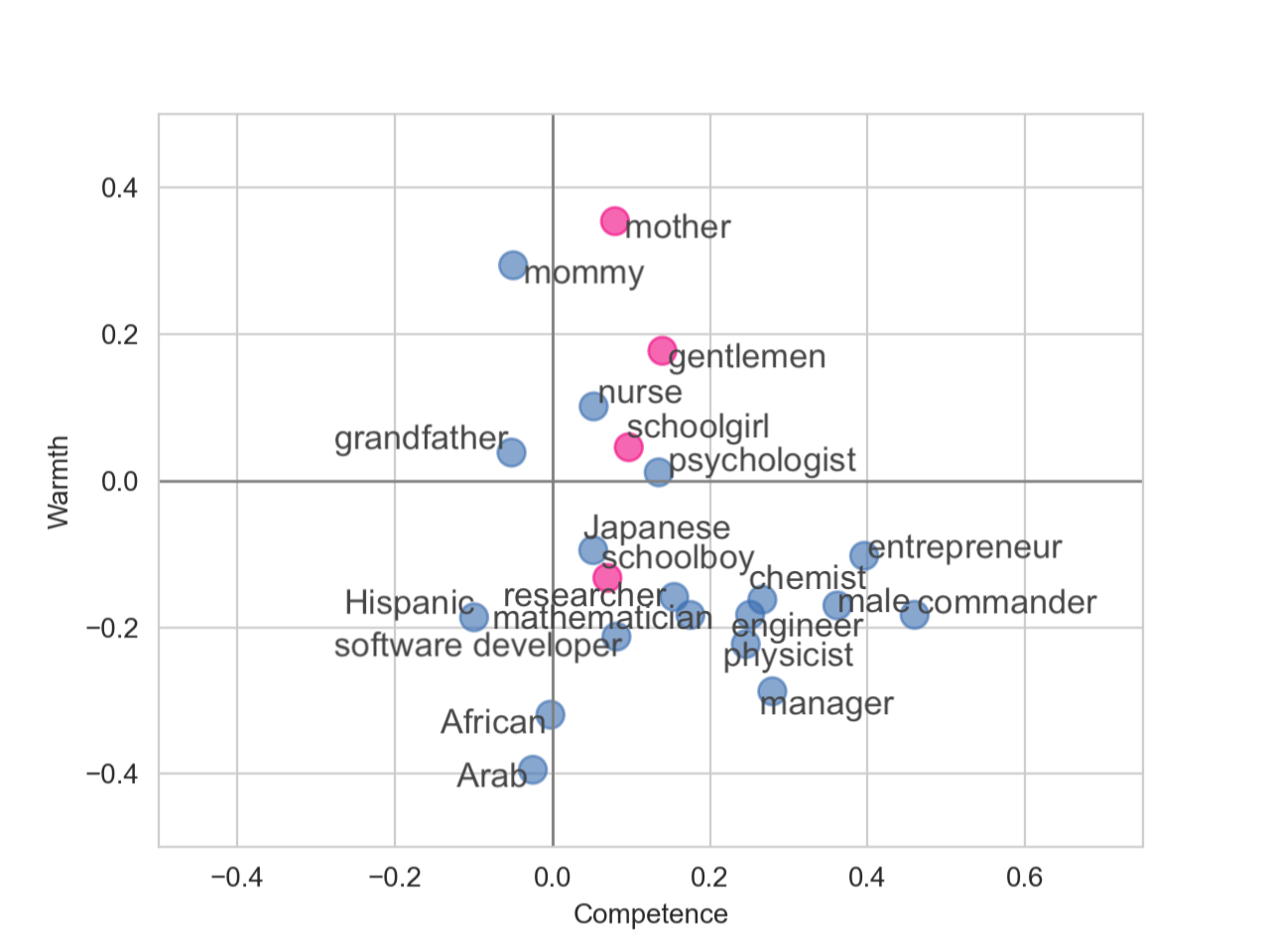

Computational Modeling of Stereotype Content in Text

Fraser, Kiritchenko, & Nejadgholi (2022)

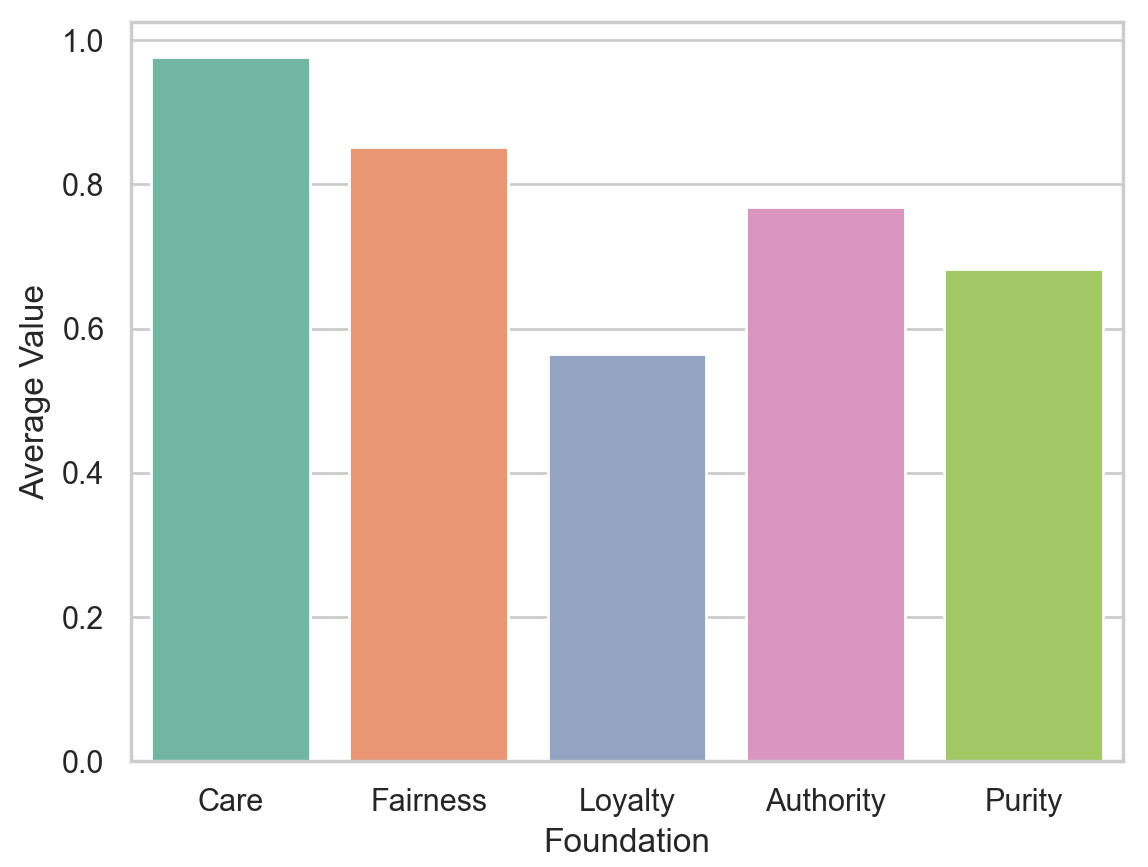

Does Moral Code Have a Moral Code? Probing Delphi's Moral Philosophy

Fraser, Kiritchenko, & Balkir (2022)

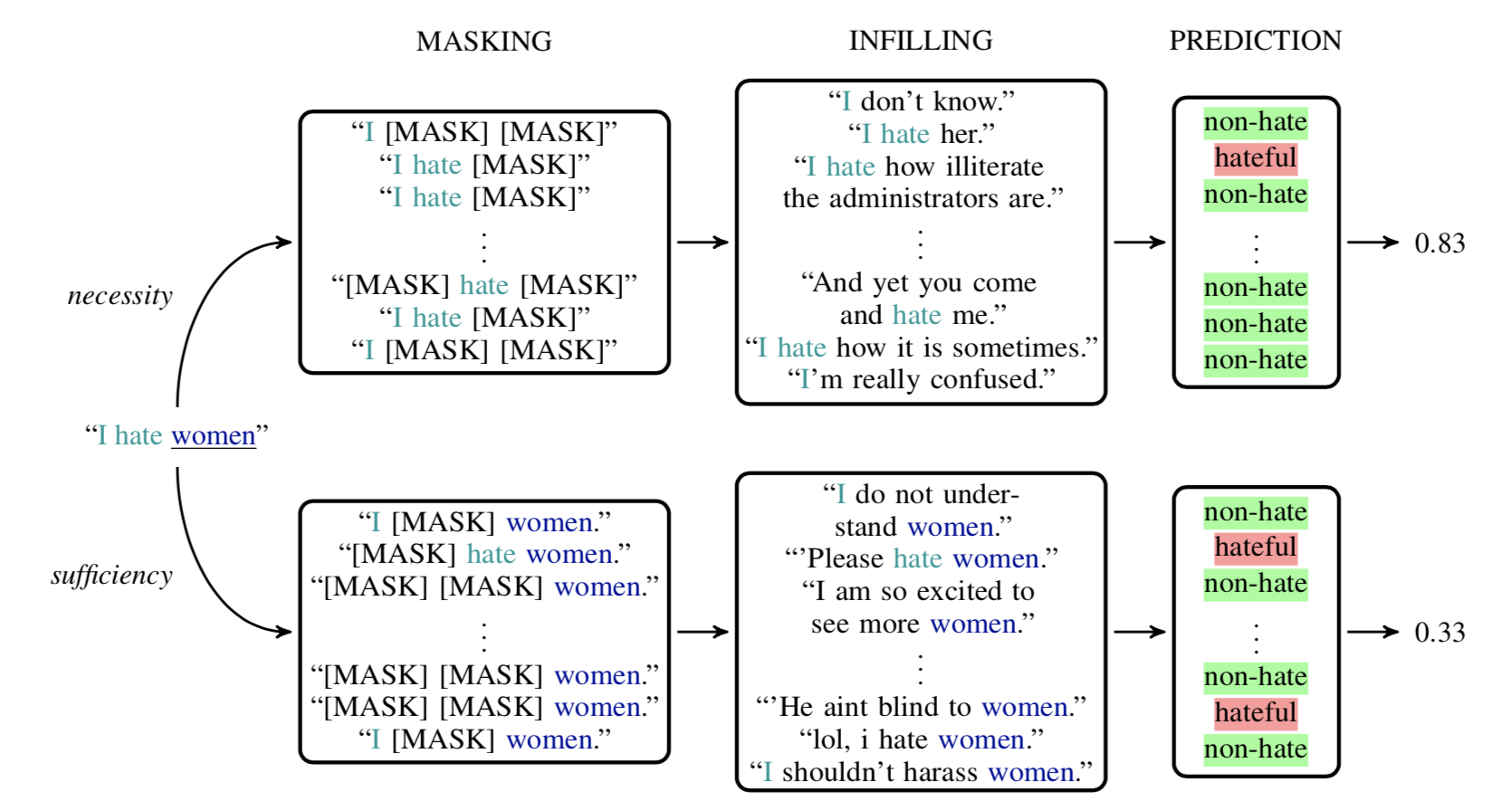

Necessity and Sufficiency for Explaining Text Classifiers: A Case Study in Hate Speech Detection

Balkir, Nejadgholi, Fraser, & Kiritchenko (2022)

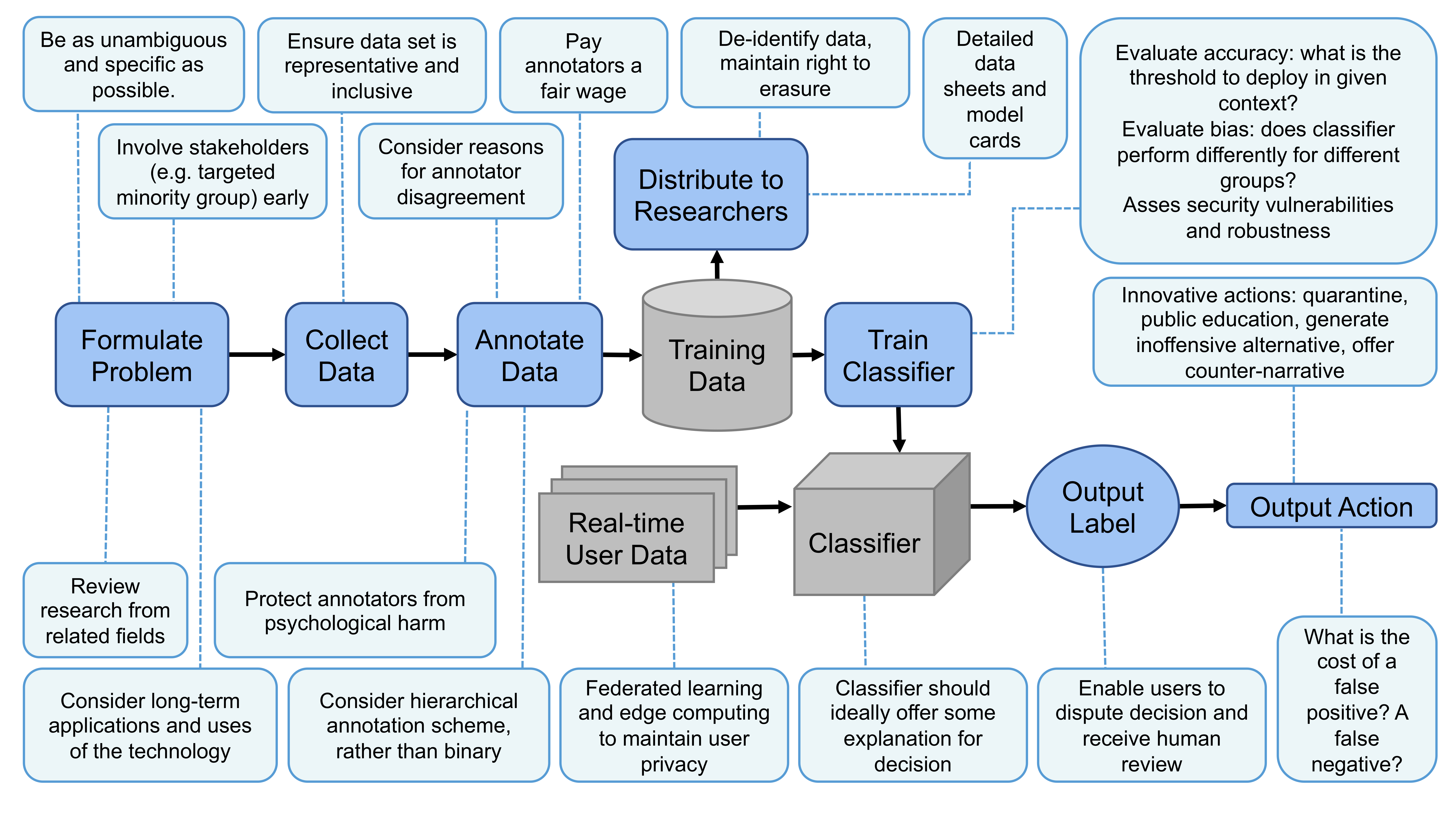

Confronting Abusive Language Online: A Survey from the Ethical and Human Rights Perspective

Kiritchenko, Nejadgholi, & Fraser (2021)

Measuring Cognitive Status from Speech in a Smart Home Environment

Fraser & Komeili (2021)

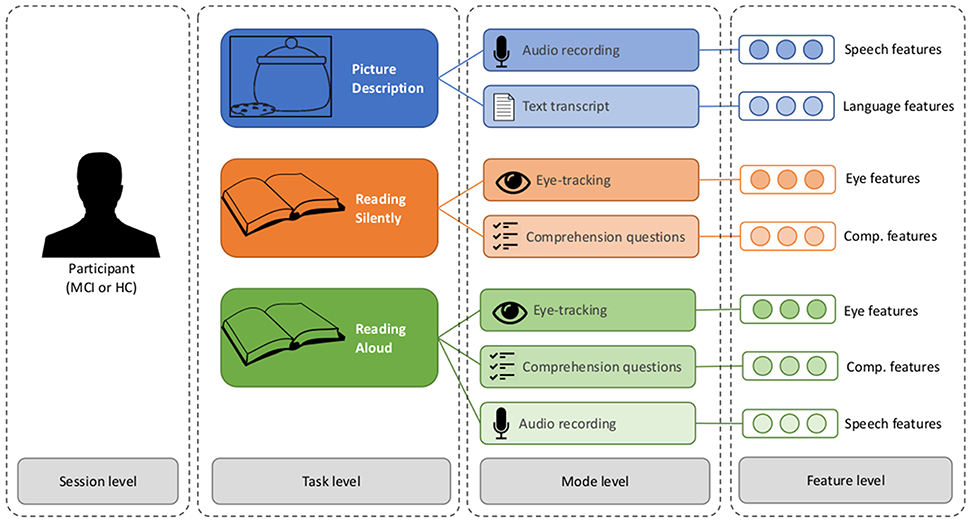

Predicting MCI Status From Multimodal Language Data Using Cascaded Classifiers

Fraser, Lundholm Fors, Eckerström, Öhman, & Kokkinakis (2019)

How Do We Feel When a Robot Dies? Emotions Expressed on Twitter Before and After hitchBOT’s Destruction

Fraser, Zeller, Smith, Mohammad, & Rudzicz (2019)

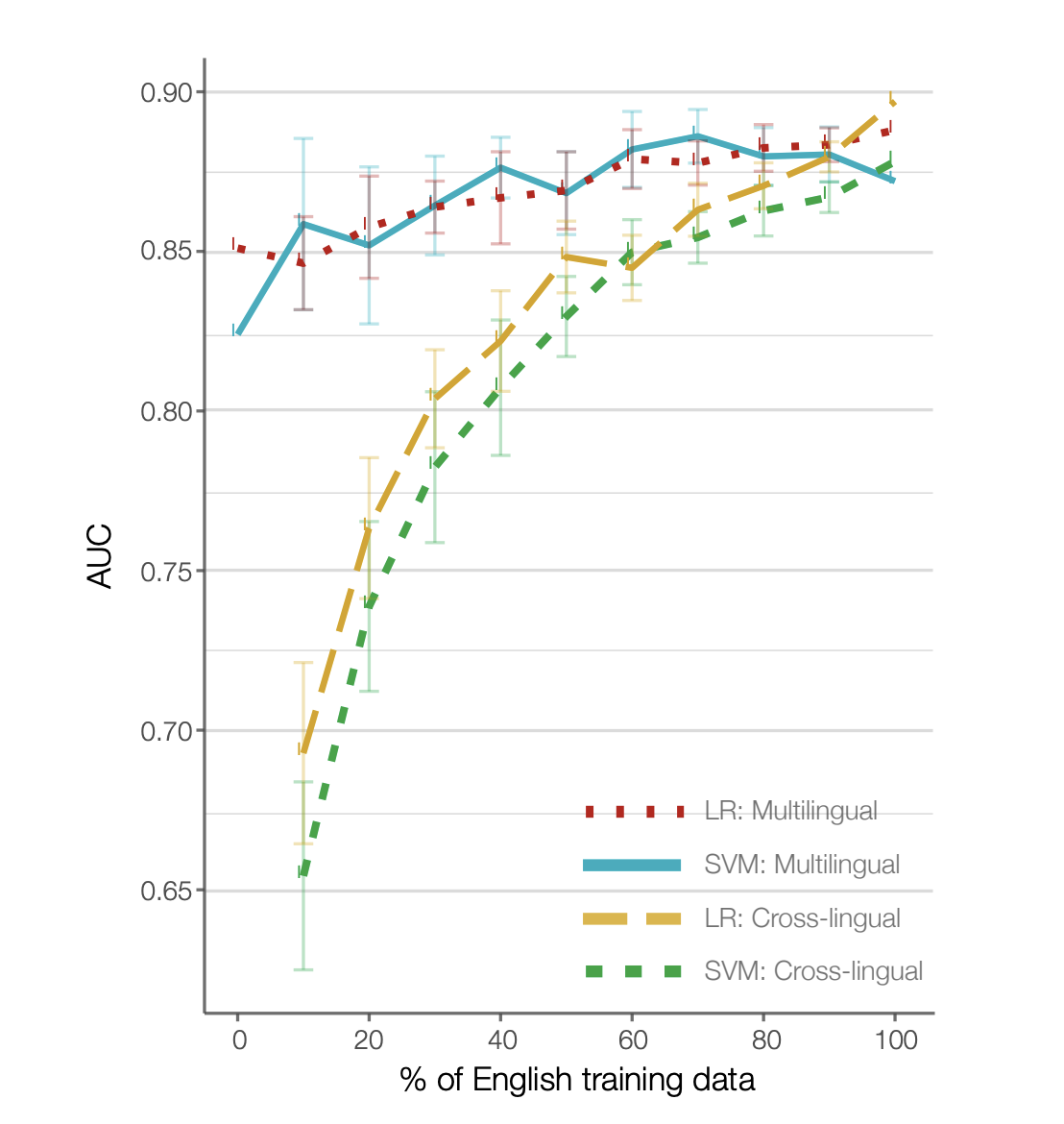

Multilingual Prediction of Alzheimer’s Disease Through Domain Adaptation and Concept-Based Language Modelling

Fraser et al. (2019)

An Analysis of Eye-Movements During Reading for the Detection of Mild Cognitive Impairment

Fraser, Lundholm Fors, Kokkinakis, & Nordlund (2017)

About

Academic bio: Dr. Kathleen Fraser is a Research Officer in the Text Analytics group at the National Research Council in Ottawa, where she investigates language technologies for healthcare and social good. Her recent research has focused on social and ethical issues in natural language processing, such as identifying stereotypes and implicitly abusive language in social media text, and on improving the interpretability and transparency of machine learning models. She also develops methods to help detect early signs of cognitive decline from speech patterns and eye movements. Dr. Fraser completed a postdoc at the University of Gothenburg, Sweden, in 2018, where she worked on detecting mild cognitive impairment with behavioural markers and multimodal machine learning. She received her PhD in Computer Science from the University of Toronto in 2016, under the supervision of Graeme Hirst and Jed Meltzer, and was awarded the Governor General's Gold Academic Medal.